Explaining the Absorption Features of Deep Learning Hyperspectral Classification Models

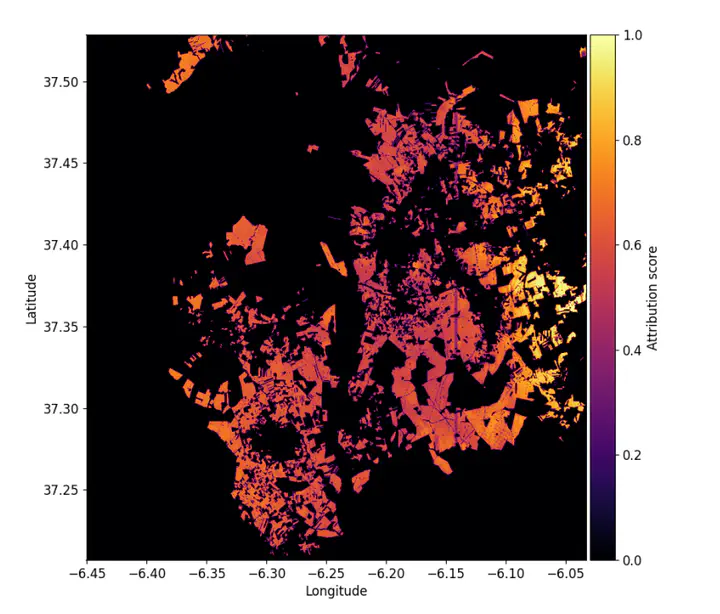

Saliency Map of Hyperspectral Classification Model


Abstract

Over the past decade, Deep Learning (DL) models have proven effective at classifying remotely sensed Earth Observation (EO) hyperspectral imaging (HSI) data. These models achieve state-of-the-art performance across various benchmark datasets by extracting abstract spatial-spectral features with 2D and 3D convolutions. However, the black-box nature of DL models hinders explanation, limits trust, and underscores the need for insight beyond raw performance metrics. In this contribution, we implement a simple yet powerful mechanism for explaining the absorption features learned by DL models, using an axiomatic attribution approach called Integrated Gradients. We showcase how this approach can be used to evaluate the relevance of a network's decisions and to compare network sensitivities when trained on single-sensor and dual-sensor data.
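Integrated Gradients attributes a model output F(x) to each input feature by integrating the gradient along a straight path from a baseline x' to the input x: IG_i(x) = (x_i - x'_i) * ∫₀¹ ∂F(x' + α(x - x'))/∂x_i dα. The following is a minimal NumPy sketch of that definition, not the authors' implementation. It assumes a hypothetical toy logistic "classifier" over 50 spectral bands (weights `w`, bias `b`) with an analytic gradient, a synthetic spectrum with an absorption dip at bands 20 to 24, and an all-zero baseline. With a real network, `grad_f` would instead be computed by automatic differentiation.

```python
import numpy as np


def integrated_gradients(grad_f, x, baseline, steps=200):
    """Approximate Integrated Gradients with a midpoint Riemann sum.

    IG_i(x) = (x_i - x'_i) * integral_0^1 dF(x' + a (x - x')) / dx_i da
    """
    alphas = (np.arange(steps) + 0.5) / steps  # midpoints of sub-intervals of [0, 1]
    # Straight-line path from the baseline to the input, shape (steps, n_bands)
    path = baseline + alphas[:, None] * (x - baseline)
    # The mean gradient along the path approximates the integral
    avg_grad = np.mean([grad_f(p) for p in path], axis=0)
    return (x - baseline) * avg_grad


# Hypothetical toy "classifier": a logistic score over 50 spectral bands that
# responds only to bands 20-24 (a stand-in for a trained network).
n_bands = 50
w = np.zeros(n_bands)
w[20:25] = -2.0
b = 1.0


def f(x):
    return 1.0 / (1.0 + np.exp(-(w @ x + b)))


def grad_f(x):
    s = f(x)
    return s * (1.0 - s) * w  # analytic gradient of the sigmoid score


# Synthetic reflectance spectrum with an absorption dip at bands 20-24
x = np.full(n_bands, 0.8)
x[20:25] = 0.3
baseline = np.zeros(n_bands)  # assumed all-zero baseline

attr = integrated_gradients(grad_f, x, baseline)
print("sum of attributions:", attr.sum())
print("F(x) - F(baseline):  ", f(x) - f(baseline))
print("most relevant bands:", np.argsort(np.abs(attr))[::-1][:5])
```

By the completeness axiom, the attributions sum to F(x) - F(x'), which the two printed values should confirm up to the small Riemann-sum error. In this toy setup only the absorption bands receive non-zero attribution, because they are the only bands the score depends on.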