Biography

I am currently working towards my PhD thesis in the Applied Mathematics Department at the University of Cambridge. In this stimulating environment, I am learning to become a well-rounded Machine Learning researcher.

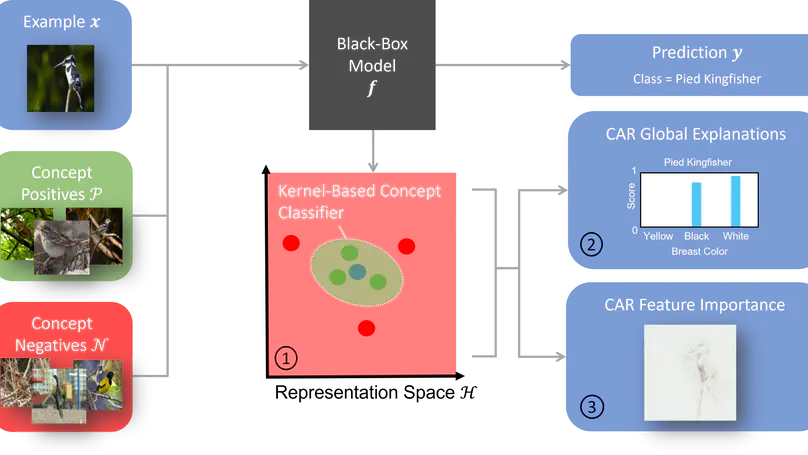

We are gradually entering a phase where humans will increasingly interact with generative and predictive AIs, thereby forming human-AI teams. I see immense potential in these teams to tackle cutting-edge scientific and medical problems. My research focuses on making these teams more efficient by improving the information flow between complex ML models and human users. This touches upon various areas of the AI literature, including ML Interpretability, Robust ML and Data-Centric AI. In some sense, my goal is to build a microscope that allows human beings to look inside a machine learning model. Through the interface of this microscope, human beings can rigorously validate ML models, extract knowledge from them and learn to use them more efficiently.

Download my resumé.

Interests

- Interpretability

- Robust ML

- Generative AI

- ML for Science and Healthcare

- Representation Learning

- Data-Centric AI

Education

- PhD in Applied Mathematics, 2020-2024, University of Cambridge

- MASt in Applied Mathematics, 2018-2019, University of Cambridge

- M1 in Physics, 2017-2018, Ecole Normale Supérieure Paris

- Bachelor in Engineering, 2014-2017, Université Libre de Bruxelles

Skills

Experience in big-tech companies, implementation of SOTA ML

Strong mathematical background; my publications have strong theoretical components

Presentation of my research at many prestigious venues (NeurIPS, ICML, ICLR)

~50% of my publications are the result of collaborative work

~50% of my publications are the result of autonomous work

Supervision of several MPhil and PhD students, creation of pedagogical YouTube videos

Experience

- Conducted research in the Machine Learning Research team.

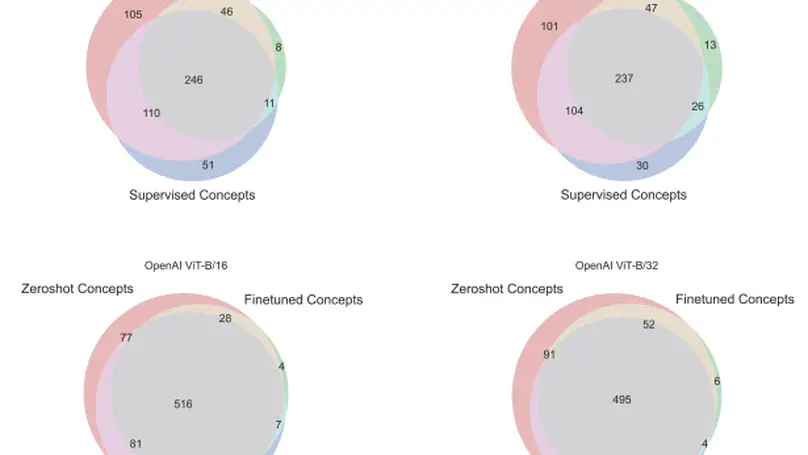

- Discovered outlier features and high polysemanticity in zero-shot CLIP models.

- Published results in a paper.

- Conducted research in the AI4Science team.

- Contributed to the development of generative ML techniques for material discovery.

- Presented findings to the AI4Science team; the results will be integrated into a larger paper.

- Conducted research in various sub-fields of machine learning.

- Published several papers in top-tier conferences (NeurIPS, ICML).

- Supervised the research of several MPhil/PhD students.

- Conducted research in machine learning applied to quantitative finance.

- Learned to turn raw financial data into predictive features.

- Presented findings to quant managers.

- Conducted research in black hole physics.

- Created several pedagogical videos to help young students with maths and physics.

- Responsible for physics example classes for first-year pharmacy students.

- Conducted research in quantum field theory and cosmology.

- Implemented a numerical solver for simulating the evolution of a toy cosmological model.

- Demonstrated the emergence of a new type of singularity called caustics.

Accomplishments

Featured Publications

Using interpretability tools, we investigate what distinguishes multimodal models that are robust to natural distribution shifts (e.g., zero-shot CLIP) from models with lower robustness.

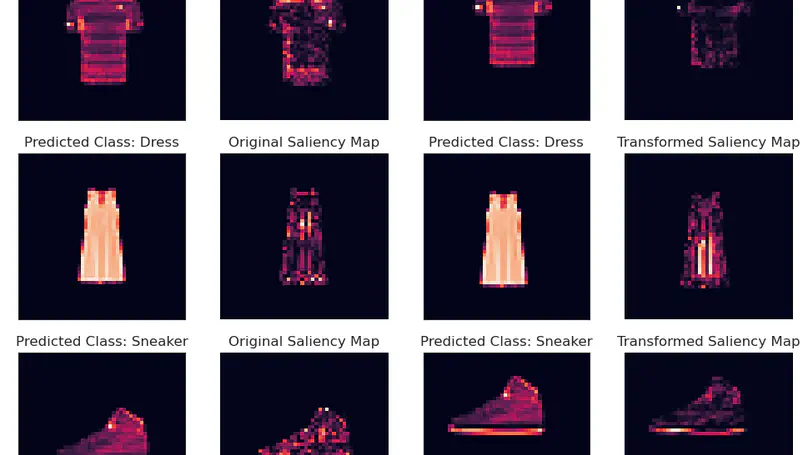

We assess the robustness of various interpretability methods by measuring how their explanations change when applying symmetries of the model to the input features.

Recent Publications

Contact

- jonathan.cr1302@gmail.com

- Centre for Mathematical Sciences, Wilberforce Rd, Cambridge CB3 0WA

- Pavilion G, Office G0.01